The Jun 3 - Jun 9 week started with TopCoder Open 2019 Round 2B, the second and last chance to qualify for round 3 (problems, results, top 5 on the left, parallel round results). The problems were a bit harder than in Round 2A, and only two participants could solve the hard one: Deretin and Kostroma. Congratulations to both, and to all advancers to Round 3!

Codeforces Round 564 followed on Friday (problems, results, top 5 on the left, analysis). The last two problems turned out to be unsolvable during the round, and CauchySheep was the fastest on the first five. Congratulations on the victory!

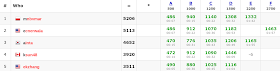

Finally, Google Code Jam Online Round 3 selected the top 25 that would travel to San Francisco for the onsite finals (problems, results, top 5 on the left). The hardest problem turned out to be unsolvable in this round as well, and the speed of solving the remaining problems was key. ACRushTC beat the second place by just 3 minutes, and the following gaps were even smaller. Congratulations to Tiancheng and to everybody who qualified for the onsite!

The easiest problem in this round required a bit of creativity: you are playing a game that starts with $10^{12}$ coins arranged in a row. In one turn, each player must pick any $10^{10}$ consecutive coins and remove them. The remaining coins are not shifted, therefore the coins that were on both sides of the removed segment do not become consecutive. The player who can't make a move loses. The first player always picks a valid move uniformly at random, and you are playing for the second player. Can you win at least 499 games out of 500?

In my previous summary, I have mentioned quite a few problems. The first one was from TopCoder: 500 table tennis players are sitting in a row. Repeatedly, we do the following: pick two adjacent players uniformly at random, play a game between them, and eliminate the player who lost, until there is only one player left. Each player has a rating $r_i$, and the probability that the $i$-th player wins against the $j$-th player is $r_i/(r_i+r_j)$. What is the probability that the given player wins the entire tournament?

The most straightforward dynamic programming would compute for each $l \le t \le r$ the probability that the $t$-th player wins in a tournament where the players from $l$-th to $r$-th participate. This dynamic programming would have $O(n^3)$ states though, and each transition would require iterating over the last non-winning player remaining, and the splitting point between the two segments that they beat, resulting in a complexity in the ballpark of $O(n^5)$ which is too much.

The first observation is to notice that we have two basically independent tournaments happening to the left and to the right of the winning player, so we can compute the probabilities that the $t$-th player wins in the $[l;t]$ and in $[t;r]$ segments and multiply them. Therefore we can afford to have a quadratic number of states: the probabilities that the first and the last player wins in each segment, and the running time of the entire solution becomes $O(n^4)$.

In order to get a further speedup, we need to become a bit more explicit with the formulas. If we denote the probability that the $l$-th player wins in the segment $[l;r]$ as $p_{l,r}$, the probability that the $r$-th player wins in that segment as $q_{l,r}$, and the probability that the $i$-th player beats the $j$-th player in a direct encounter as $w_{i,j}$, then our current solution can be viewed as:

$$p_{l,r} = \sum_{x} \sum_{y} \frac{1}{r-l} \cdot p_{l,x} \cdot q_{x+1,y} \cdot p_{y,r} \cdot w_{l,y}$$

Where $y$ denotes the player we beat the last, and $x$ denotes the right boundary of the segment of players that we beat directly or indirectly before beating $y$. Note that the value of $x$ does not actually depend on the outcomes of the games, just on which game is the last one: if we imagine that initially we have $n$ players and $n-1$ "potential games" placed between them, then at every turn we take one potential game and remove it, so the order of games is just a permutation of $n-1$ elements, and the value of $x$ is the last element of that permutation, so each possible value has the same probability of $1/(n-1)$, which transforms to $1/(r-l)$ in our formula.

There are two magical things about this result:

- The fact that after we condition on a given $x$, the games happening in $[l;x]$ and $[x+1;r]$ segments are independent and random in the same sense as in the original problem, so that dynamic programming can work at all.

- The fact that the probability for any $x$ is just $1/(r-l)$ and crucially does not depend on $x$ enables the most exciting magic of this problem to happen, because we can now reorder our summation as

$$p_{l,r} = \sum_{y} \frac{1}{r-l} \cdot \Big( \sum_{x} p_{l,x} \cdot q_{x+1,y} \Big) \cdot p_{y,r} \cdot w_{l,y}$$

And notice that the inner sum on $x$ does not depend on $r$, and can be computed as an additional dynamic programming table $u_{l,y}$ and reused between different values of $r$, thus reducing the overall running time to $O(n^3)$. Had the probability depended on $x$, we would not be able to collapse the inner summation, and the problem would be much less interesting.
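The recurrences above can be sketched in Python roughly as follows. This is a minimal sketch, not the reference solution: the names `win_probabilities` and `brute_force`, the 0-based indexing, and the mirrored recurrence for $q_{l,r}$ (obtained by reflecting the formula for $p_{l,r}$, which happens to reuse the same table $u$) are my assumptions. The brute force walks over all orders of the $n-1$ potential games and is only meant as a cross-check on tiny inputs.

```python
from fractions import Fraction
from itertools import permutations


def win_probabilities(ratings):
    """O(n^3) DP: probability that each player wins the whole tournament."""
    n = len(ratings)
    # w[i][j]: probability that player i beats player j in a direct game.
    w = [[ratings[i] / (ratings[i] + ratings[j]) for j in range(n)]
         for i in range(n)]
    # p[l][r] / q[l][r]: the leftmost / rightmost player wins segment [l; r].
    p = [[0] * n for _ in range(n)]
    q = [[0] * n for _ in range(n)]
    # u[l][y] = sum over x of p[l][x] * q[x+1][y]; it does not depend on r.
    u = [[0] * n for _ in range(n)]
    for i in range(n):
        p[i][i] = q[i][i] = 1
    for length in range(1, n):
        for l in range(n - length):
            r = l + length
            u[l][r] = sum(p[l][x] * q[x + 1][r] for x in range(l, r))
            p[l][r] = sum(u[l][y] * p[y][r] * w[l][y]
                          for y in range(l + 1, r + 1)) / (r - l)
            # Mirrored recurrence (assumption): y is the last player beaten by r.
            q[l][r] = sum(q[l][y] * u[y][r] * w[r][y]
                          for y in range(l, r)) / (r - l)
    # Player t must win both [0; t] and [t; n-1].
    return [q[0][t] * p[t][n - 1] for t in range(n)]


def brute_force(ratings):
    """Exponential cross-check: enumerate every order of the n-1 games."""
    n = len(ratings)
    total = [0] * n
    orders = list(permutations(range(n - 1)))

    def play(order, idx, champ, prob):
        # champ[i] is the current champion of the block containing position i.
        if idx == len(order):
            total[champ[0]] += prob
            return
        gap = order[idx]
        a, b = champ[gap], champ[gap + 1]
        for winner, loser in ((a, b), (b, a)):
            pw = ratings[winner] / (ratings[winner] + ratings[loser])
            merged = tuple(winner if c == loser else c for c in champ)
            play(order, idx + 1, merged, prob * pw)

    for order in orders:
        play(order, 0, tuple(range(n)), Fraction(1, len(orders)))
    return total
```

Passing `Fraction` ratings keeps both functions exact, which makes them easy to compare on small inputs; with plain numbers the DP works in floating point.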

The official editorial does not mention this magical property at all, so I'm wondering if there's a less magical implied explanation there :)

The second problem I mentioned was from Codeforces: you have 300000 objects, with each object having two attributes: a value between $-10^9$ and $10^9$, and a nonzero mask with at most 62 bits. Given another mask $s$, we compute $f(s)$ as the sum of values of objects which have an even number of shared bits between their mask and $s$ minus the sum of values of objects which have an odd number of shared bits between their mask and $s$. $f(0)$, which is just the sum of all values, is not equal to zero. Your goal is to find any $s$ such that $f(0) \cdot f(s)$ is negative, in other words to flip the sign of the sum.

Without loss of generality, let's assume that f(0) is positive, so our goal is to make the sum negative.

First, we can notice that the masks simply mean that we have 62 subsets of our objects, we're allowed to flip the sign of values in any of those subsets, and each object belongs to at least one subset.

Had the subsets been disjoint, the solution would have been easy: we could just flip the sign of all subsets which have a positive sum. Then all subsets would have a zero or negative sum, and we would have at least one negative subset since the total sum is not zero.

It turns out that our problem is almost as easy: let's fix an arbitrary order in which we will traverse the subsets, and say that each object *truly belongs* only to the last subset that contains it, in that order. Then we can go through the subsets in that order, and flip every subset which has a positive sum of values of objects that *truly belong* to it. Note that the values we are summing here could have had their signs flipped by the previous subsets that those objects belonged to, but did not truly belong to.

Since the object is never touched after we process the subset it truly belongs to, the total sum of values in the end is equal to the sum of sums of values of truly belonging objects for each subset as we process it, and all those sums are zero or negative by construction, and at least one of them is negative, so the overall sum is negative as needed.
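The greedy described above could be sketched like this. It is a minimal sketch under my own assumptions: objects are given as `(value, mask)` pairs, the subsets are traversed in increasing bit order (so an object truly belongs to its highest set bit, one arbitrary choice of order), and the names `f` and `find_flipping_mask` are made up for illustration.

```python
def f(objects, s):
    """Sum of values, negated for objects sharing an odd number of bits with s."""
    return sum(-v if bin(m & s).count("1") % 2 else v for v, m in objects)


def find_flipping_mask(objects):
    """Return s such that f(objects, 0) * f(objects, s) < 0."""
    # Without loss of generality make the initial sum positive.
    sign = 1 if sum(v for v, _ in objects) > 0 else -1
    # An object truly belongs to the last subset containing it: its highest bit.
    groups = {}
    for value, mask in objects:
        groups.setdefault(mask.bit_length() - 1, []).append((sign * value, mask))
    s = 0
    for bit in sorted(groups):
        # Current values already reflect flips made by lower bits of s.
        if f(groups[bit], s) > 0:
            s |= 1 << bit  # flip this subset so its true members sum below zero
    return s
```

For example, with `objects = [(1, 0b01), (1, 0b11), (-1, 0b10)]` the first subset has a positive sum and gets flipped, after which the objects truly belonging to the second subset already sum to a negative number, so the sketch returns `s = 1`.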

Finally, an AtCoder problem was discussed as well: you are given at most 1000 groups of red balls, and at most 1000 groups of blue balls. Each group contains at most 10 balls in the same location, and the coordinates of those locations are integers not exceeding $10^9$ by absolute value. The total number of red balls is equal to the total number of blue balls. Consider all possible matchings where each red ball is matched with one blue ball and vice versa. You need to find the maximum sum of Manhattan distances between the matched balls.

The naive min-cost-max-flow network would look as follows: edges from the source to each red group with capacity equal to the size of the group and cost 0, edges from each red group to each blue group with infinite capacity and cost equal to negative Manhattan distance between the points, and edges from each blue group to the sink with capacity equal to the size of the group and cost 0.

The negative Manhattan distance has the following form: $-|x_1-x_2|-|y_1-y_2|$. Suppose we know how each absolute value expands (the signs of its argument), for example: $-(x_1-x_2)+(y_1-y_2)$. This can be reformulated as $(y_1-x_1)+(x_2-y_2)$. Let's insert a vertex in the middle of this edge, and split its cost into two: the edge from the red group to this vertex has cost $(y_1-x_1)$, and the edge from this vertex to the blue group has cost $(x_2-y_2)$.

So far we did not change much, but here comes the key idea: we have only four types of those intermediate vertices, corresponding to the four ways the absolute values can be expanded, and for all intermediate vertices of the same type the costs of the edges going into them from a fixed red group are the same (since the value $(y_1-x_1)$ does not depend on the blue group), and the costs of the edges from them to a fixed blue group are the same. Therefore the natural next step is to collapse all intermediate vertices of the same type into one, and any valid flow in the old network naturally corresponds to a valid flow in the new network with the same cost.

The new network has more valid flows, though: now it is possible for the flow to go from a red group to a blue group through a "wrong" type of intermediate vertex. However, such a path has a higher cost than going through the correct type of intermediate vertex (since expanding the absolute value in the wrong way results in a lower value), and therefore will never appear in a minimum cost flow, so the value of the min-cost-max-flow in the new network is the same, and the new network has linear size!
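To make the cost splitting concrete, here is a small Python sketch. It does not run a flow algorithm. It only checks that the cheapest of the four red-to-blue paths through the collapsed intermediate vertices costs exactly the negative Manhattan distance, and compares edge counts. All names (`SIGNS`, `red_edge_cost`, `blue_edge_cost`, `cheapest_path_cost`, `edge_counts`) are hypothetical and chosen just for this illustration.

```python
from itertools import product

# The four intermediate vertex types: one sign choice per absolute value.
SIGNS = list(product((1, -1), repeat=2))


def red_edge_cost(red, sx, sy):
    """Cost of the edge from a red group to the intermediate vertex (sx, sy)."""
    x1, y1 = red
    return -(sx * x1 + sy * y1)


def blue_edge_cost(blue, sx, sy):
    """Cost of the edge from the intermediate vertex (sx, sy) to a blue group."""
    x2, y2 = blue
    return sx * x2 + sy * y2


def cheapest_path_cost(red, blue):
    """Minimum cost over the four types; a wrong type only costs more."""
    return min(red_edge_cost(red, sx, sy) + blue_edge_cost(blue, sx, sy)
               for sx, sy in SIGNS)


def edge_counts(num_red, num_blue):
    """(naive, collapsed) edge counts, including source and sink edges."""
    naive = num_red + num_blue + num_red * num_blue
    collapsed = num_red + num_blue + 4 * (num_red + num_blue)
    return naive, collapsed
```

The type `(1, -1)` reproduces the example from the text: the red edge costs $y_1 - x_1$ and the blue edge costs $x_2 - y_2$. With 1000 groups on each side, `edge_counts(1000, 1000)` gives 1002000 edges for the naive network versus 10000 for the collapsed one.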

Thanks for reading, and check back for more.